
Poster, Podium & Video Sessions

Video

V03: Urolithiasis/Endourology

V03-01: Automated Kidney Stone Segmentation During Ureteroscopy Using Computer Vision Techniques

Friday, May 13, 2022

1:00 PM – 1:10 PM

Location: Video Abstracts Theater

Chase Floyd*, Columbia, SC, Zachary Stoebner, Daiwei Lu, Ipek Oguz, Nicholas Kavoussi, Nashville, TN

Video Presenter(s): Chase Floyd

Introduction: Ureteroscopy is one of the most common procedures for the surgical treatment of kidney stones. However, the endoscopic field of view is small, and visualization can be obscured by blood and debris. Novel machine learning techniques can augment and improve endoscopic visualization. We sought to develop a computer vision method to automatically identify and segment stones during ureteroscopy.

Methods: Twenty separate ureteroscopy videos recorded with digital ureteroscopes (Karl Storz Flex Xc) were collected (mean duration ± SD, 22 ± 13 seconds). Frames were extracted from each video at 20 frames per second (fps), and the stone was manually annotated in each frame. Three established machine learning architectures, U-Net, U-Net++, and DenseNet, were trained on the annotated frames. Each model underwent hyperparameter tuning (number of epochs, batch size, and learning rate) so that different configurations of each architecture could be compared and optimized. Eighty percent of the data was used for training, 10% for validation, and 10% for testing. Outcomes included the Dice similarity coefficient (DSC), per-pixel accuracy, and the area under the receiver operating characteristic curve (ROC-AUC).
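The abstract does not state the native frame rate of the recordings or whether the 80/10/10 split was made per frame or per video. The sketch below is a hypothetical illustration under two labeled assumptions: frames are resampled from a known source rate onto a uniform 20 fps grid, and the split is made by video so that near-identical adjacent frames from one recording cannot land in both the training and test sets. The names `sample_indices` and `split_by_video` are illustrative, not the authors' code.

```python
import random


def sample_indices(n_frames, src_fps, target_fps=20.0):
    """Indices of source frames closest to a uniform target_fps time grid.

    Assumption: the native recording rate (src_fps) is known; the abstract
    only states that frames were extracted at 20 fps.
    """
    duration_s = n_frames / src_fps
    n_out = int(duration_s * target_fps)
    step = src_fps / target_fps  # source frames per extracted frame
    return [min(n_frames - 1, round(k * step)) for k in range(n_out)]


def split_by_video(video_ids, train=0.8, val=0.1, seed=0):
    """Hypothetical 80/10/10 split at the video level (not per frame).

    Keeping every frame of a recording in one subset avoids leakage
    between training and test data.
    """
    ids = sorted(video_ids)
    random.Random(seed).shuffle(ids)
    n_train = round(len(ids) * train)
    n_val = round(len(ids) * val)
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]


# Example: 20 videos -> 16 train, 2 validation, 2 test.
train_ids, val_ids, test_ids = split_by_video(range(20))
# A 2-second clip at an assumed 30 fps yields 40 frames at 20 fps.
idx = sample_indices(60, src_fps=30)
```

A frame-level split would give more balanced subset sizes, but with only 20 source videos it risks inflating test scores through near-duplicate frames.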

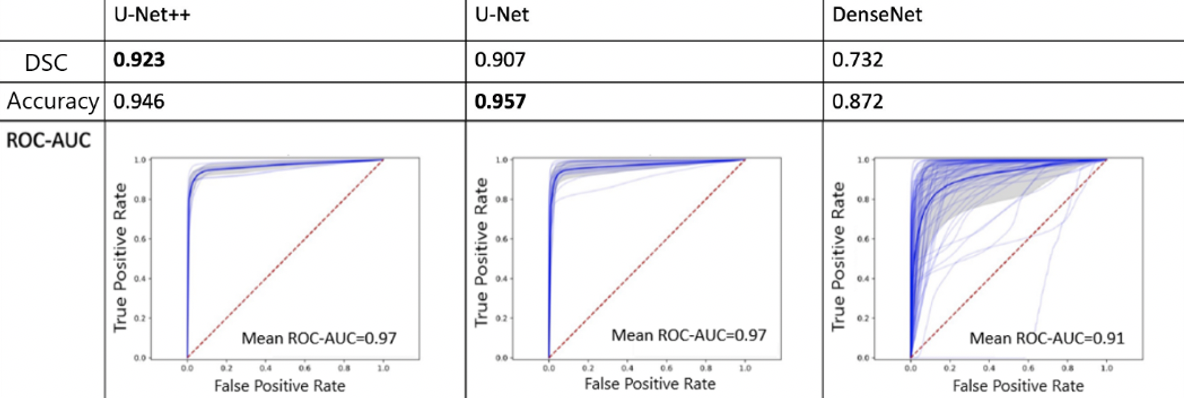

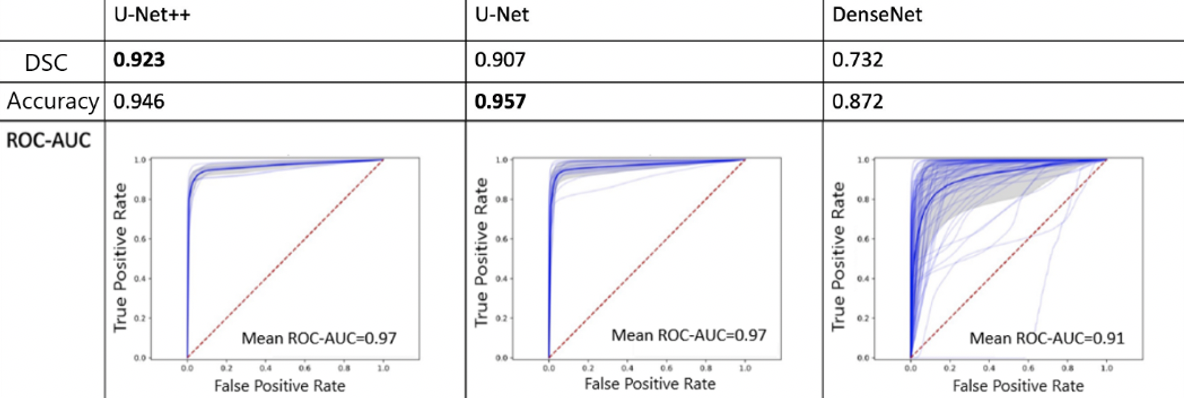

Results: The U-Net++ models performed best, with the highest mean DSC; their mean DSC, accuracy, and ROC-AUC were 0.92, 0.95, and 0.97, respectively. They were followed by the U-Net models, with DSC, accuracy, and ROC-AUC of 0.91, 0.96, and 0.97, respectively. The DenseNet models performed comparatively worse, with DSC, accuracy, and ROC-AUC of 0.73, 0.87, and 0.91, respectively (Figure 1). Additionally, we implemented a system for processing real-time video feeds with overlaid model predictions. The models annotated new videos at 30 fps while maintaining accuracy.
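The three outcome measures can be sketched per frame as follows. This is a minimal NumPy illustration, not the authors' evaluation code, assuming binary ground-truth masks, per-pixel stone probabilities from the model, and a 0.5 threshold for DSC and accuracy (the abstract reports neither the threshold nor how scores were aggregated across frames). ROC-AUC is computed with the rank-based Mann-Whitney formulation, which counts tied scores as one half.

```python
import numpy as np


def dice(pred, target):
    """Dice similarity coefficient 2|A ∩ B| / (|A| + |B|) for binary masks."""
    pred, target = np.asarray(pred, bool), np.asarray(target, bool)
    denom = pred.sum() + target.sum()
    # Convention (assumption): two empty masks count as perfect agreement.
    if denom == 0:
        return 1.0
    return float(2.0 * np.logical_and(pred, target).sum() / denom)


def pixel_accuracy(pred, target):
    """Fraction of pixels whose predicted label matches the annotation."""
    return float(np.mean(np.asarray(pred, bool) == np.asarray(target, bool)))


def roc_auc(target, scores):
    """Per-pixel ROC-AUC via the Mann-Whitney U statistic (ties count 1/2)."""
    y = np.asarray(target, bool).ravel()
    s = np.asarray(scores, float).ravel()
    n_pos, n_neg = int(y.sum()), int((~y).sum())
    if n_pos == 0 or n_neg == 0:
        raise ValueError("ROC-AUC needs both stone and background pixels")
    # Average 1-based ranks so that tied scores share the same rank.
    _, inv, counts = np.unique(s, return_inverse=True, return_counts=True)
    ends = np.cumsum(counts)
    avg_rank = ends - (counts - 1) / 2.0
    ranks = avg_rank[inv.ravel()]
    u = ranks[y].sum() - n_pos * (n_pos + 1) / 2.0
    return float(u / (n_pos * n_neg))


# Toy 1x4 "frame": two stone pixels, two background pixels.
target = np.array([[1, 1, 0, 0]])
prob = np.array([[0.9, 0.4, 0.6, 0.1]])
pred = prob >= 0.5  # assumed threshold
```

For these toy values, DSC and accuracy are both 0.5 and ROC-AUC is 0.75. Unlike DSC and accuracy, ROC-AUC is threshold-free, which is one reason it can stay high even when the thresholded masks disagree with the annotation.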

Conclusions: Computer vision models can be trained to accurately segment stones during ureteroscopy. Their ability to annotate new videos at 30 frames per second demonstrates the feasibility of applying them in real time in the operating room. Further model optimization, as well as development of an automated endoscopic navigation system, is ongoing.
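At 30 fps, a pipeline that processes frames one after another must average no more than 1/30 s, about 33.3 ms, per frame. The abstract does not describe how the overlay system was built; the sketch below is a hypothetical NumPy illustration of two pieces such a system needs: alpha-blending a predicted stone mask onto an RGB frame, and measuring throughput against the 30 fps target. The `segment` function in the usage example is a placeholder threshold standing in for a trained model.

```python
import time

import numpy as np

TARGET_FPS = 30.0
FRAME_BUDGET_S = 1.0 / TARGET_FPS  # about 33.3 ms per frame


def overlay_mask(frame, mask, color=(0, 255, 0), alpha=0.4):
    """Alpha-blend a binary stone mask onto an RGB uint8 frame."""
    out = frame.astype(np.float32)
    m = np.asarray(mask, bool)
    # Blend only masked pixels; the (N, 3) selection broadcasts with color.
    out[m] = (1.0 - alpha) * out[m] + alpha * np.asarray(color, np.float32)
    return np.clip(out, 0, 255).astype(np.uint8)


def measure_fps(process, frames):
    """Frames per second achieved by calling process() on each frame in turn."""
    start = time.perf_counter()
    for frame in frames:
        process(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")


def segment(frame):
    """Hypothetical placeholder: threshold the red channel instead of a model."""
    return frame[..., 0] > 128


frames = [np.random.randint(0, 256, (256, 256, 3), dtype=np.uint8) for _ in range(30)]
fps = measure_fps(lambda f: overlay_mask(f, segment(f)), frames)
meets_target = fps >= TARGET_FPS
```

In a real system, model inference rather than blending would dominate the frame budget, so the measurement should wrap the full predict-and-overlay step on the target hardware.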

Source of Funding: None
