Poster, Podium & Video Sessions

Moderated Poster

MP41: Surgical Technology & Simulation: Training & Skills Assessment

MP41-03: Computer Vision for Surgical Errors Detection during Robotic Tissue Dissection

Sunday, May 15, 2022

10:30 AM – 11:45 AM

Location: Room 228

Rafal Kocielnik, Inhoo Lee, Pasadena, CA, Jasper Laca*, Sidney I Roberts, Jessica H Nguyen, Los Angeles, CA, Idris O Sunmola, Evanston, IL, De-An Huang, Santa Clara, CA, Sandra P Marshall, Solana Beach, CA, Anima Anandkumar, Pasadena, CA, Andrew J Hung, Los Angeles, CA

Poster Presenter(s)

Introduction: Real-time detection of errors during robot-assisted surgery (RAS) currently requires supervision from experienced surgeons. Herein, we attempt to automate surgical error detection through machine learning (ML)-based computer vision.

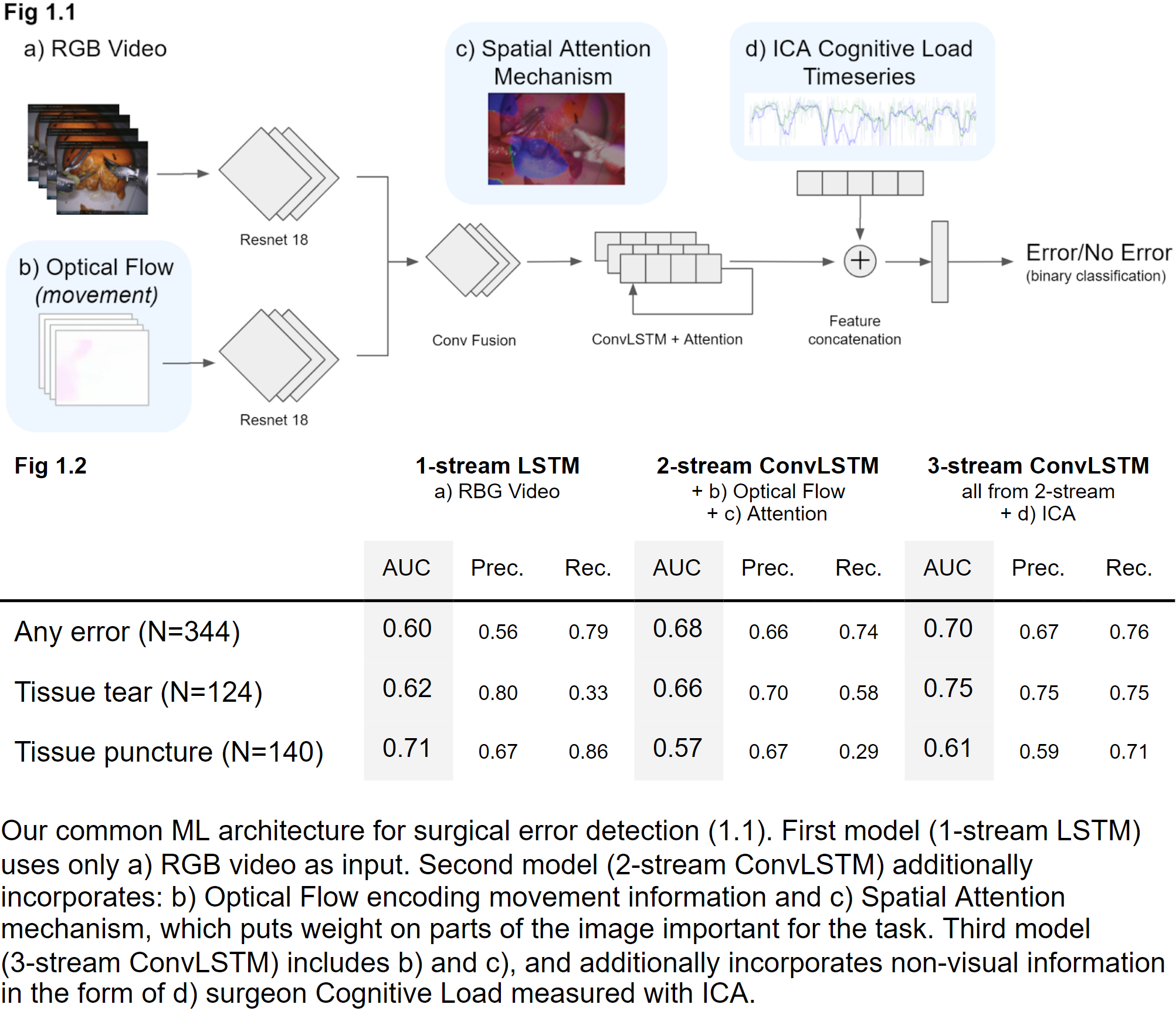

Methods: We use RAS data from 23 surgeons performing a simulated dry-lab tissue dissection task on a live da Vinci Xi surgical robot. The data contain video and a per-second Index of Cognitive Activity (ICA), derived from pupillary change and tracked by Tobii eye trackers. In this data set, 172 error instances, representing 11 error types, were previously annotated. Of these, Tissue tears (n=70; 40.7%) and Tissue punctures (n=62; 36.0%) are the 2 most common error types. We extract 5-second windows around each error (positive label) and randomly sample the remaining data (negative label). We train 3 variations of ML architectures on an 80/20 train-test random split stratified by label and participant. Our base 1-stream Long Short-Term Memory (LSTM) ML model relies on RGB (red, green, blue) video input only (Fig 1.1a). Our 2-stream model expands on the base model to include movement information via optical flow (Fig 1.1b) and a spatial attention mechanism (Fig 1.1c). Finally, the 3-stream model additionally includes surgeon ICA data (Fig 1.1d).
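The windowing and splitting steps described above can be illustrated with a minimal Python sketch. It is not the authors' pipeline: the abstract does not say how windows are positioned, how many negatives are drawn, or exactly how stratification is applied. The sketch assumes windows centered on the annotated error time, negatives that do not overlap any positive window, and a split performed within each (participant, label) group. All function names and the sample tuple layout are hypothetical.

```python
import random
from collections import defaultdict

WINDOW_SEC = 5  # window length stated in the abstract


def positive_windows(error_times, duration, window=WINDOW_SEC):
    """Return one (start, end) window per error, centered on the error time.

    Assumption: centering; windows are shifted to stay inside [0, duration].
    Requires duration >= window.
    """
    half = window / 2
    windows = []
    for t in error_times:
        start = max(0.0, min(t - half, duration - window))
        windows.append((start, start + window))
    return windows


def overlaps(a, b):
    """True if half-open intervals a and b share any time."""
    return a[0] < b[1] and b[0] < a[1]


def negative_windows(positives, duration, n, window=WINDOW_SEC,
                     seed=0, max_tries=10_000):
    """Randomly sample up to n windows that avoid every positive window."""
    rng = random.Random(seed)
    negatives = []
    tries = 0
    while len(negatives) < n and tries < max_tries:
        tries += 1
        start = rng.uniform(0.0, duration - window)
        candidate = (start, start + window)
        if not any(overlaps(candidate, p) for p in positives):
            negatives.append(candidate)
    return negatives


def stratified_split(samples, test_frac=0.2, seed=0):
    """Split samples of the form (participant_id, label, payload).

    Assumption: "stratified by label and participant" is read as holding out
    test_frac of every (participant, label) group.
    """
    rng = random.Random(seed)
    groups = defaultdict(list)
    for sample in samples:
        groups[(sample[0], sample[1])].append(sample)
    train, test = [], []
    for key in sorted(groups):
        items = list(groups[key])
        rng.shuffle(items)
        n_test = round(len(items) * test_frac)
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test
```

Under this reading, every participant contributes to both the training and test sets. A split that holds out whole participants would instead measure generalization to unseen surgeons, and the abstract does not say which was intended.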

Results: For detection of “Any error”, additional data (movement+ICA) improve performance from AUC 0.60 (1-stream) to 0.70 (3-stream; Fig 1.2). Of the two most common error types, detection of “Tissue tears” benefits from ICA data, which may indicate the importance of the surgeon's state of mind while committing this type of error. In contrast, detection of “Tissue punctures” degrades from 0.71 to 0.57 with the addition of the attention mechanism and movement data. This may reflect conflicting information introduced by the added streams, or these errors being less visually apparent, which could make it challenging for the ML attention mechanism to identify them.
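For readers unfamiliar with the metric, AUC can be read as the probability that a randomly chosen error window receives a higher model score than a randomly chosen non-error window, with ties counted as half. The following Python sketch computes it that way. The function name and the toy scores are illustrative only and are not taken from the study.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores.

    labels: iterable of 0/1 (1 = error window); scores: model outputs.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # tie counts as half
    return wins / (len(pos) * len(neg))


# Toy example: 3 of the 4 positive/negative pairs are ranked correctly.
print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))  # 0.75
```

On this scale 0.5 corresponds to chance-level ranking and 1.0 to perfect separation.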

Conclusions: We have demonstrated the feasibility of ML-based automated error detection in RAS. Our exploration demonstrates the positive value of including additional information in certain tasks. Future work will explore better mechanisms of combining multiple information sources and also expand the setup to detection and possibly prediction of a broader set of errors.

Source of Funding: none
