Poster, Podium & Video Sessions

Moderated Poster

MP41: Surgical Technology & Simulation: Training & Skills Assessment

MP41-09: Combining Video and Force Sensor Data within a Multilabel Multimodal Deep Learning Algorithm to Provide Automated Feedback for Robot Assisted Radical Prostatectomy Simulations

Sunday, May 15, 2022

10:30 AM – 11:45 AM

Location: Room 228

Patrick Saba*, Beilei Xu, Rachel Melnyk, Christopher Wanderling, Ahmed Ghazi, Rochester, NY

Patrick Saba, MS, BA

University of Rochester

Poster Presenter(s)

Introduction: Our approach of combining 3D printing and hydrogel casting allows us to create realistic robot-assisted radical prostatectomy (RARP) simulations with force sensors embedded in the neurovascular bundles (NVB). We sought to develop an algorithm that combines video and sensor data to provide quantitative, objective feedback by automatically identifying and grading actions performed on a validated RARP simulation.

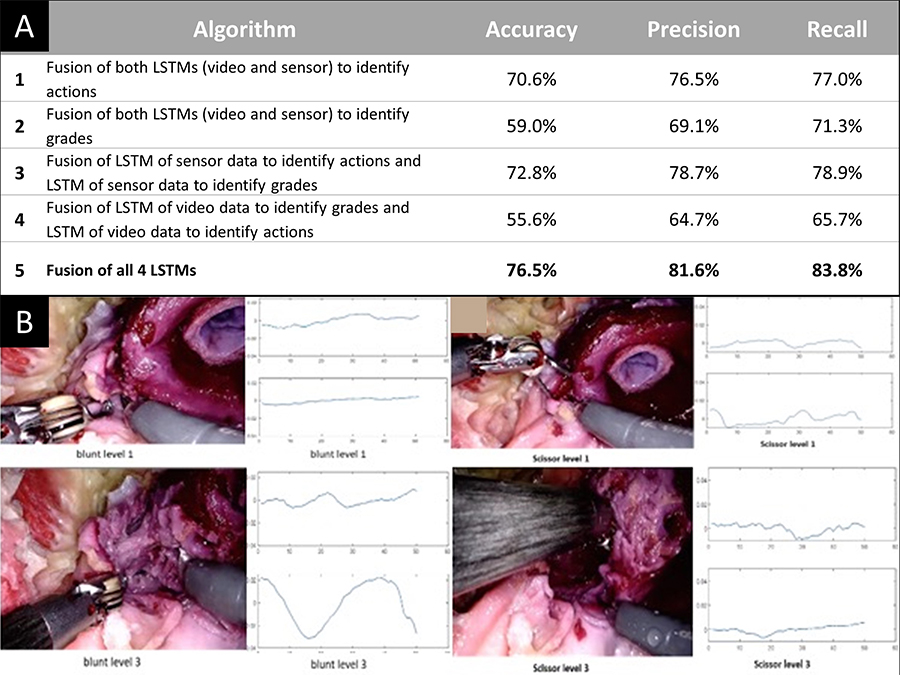

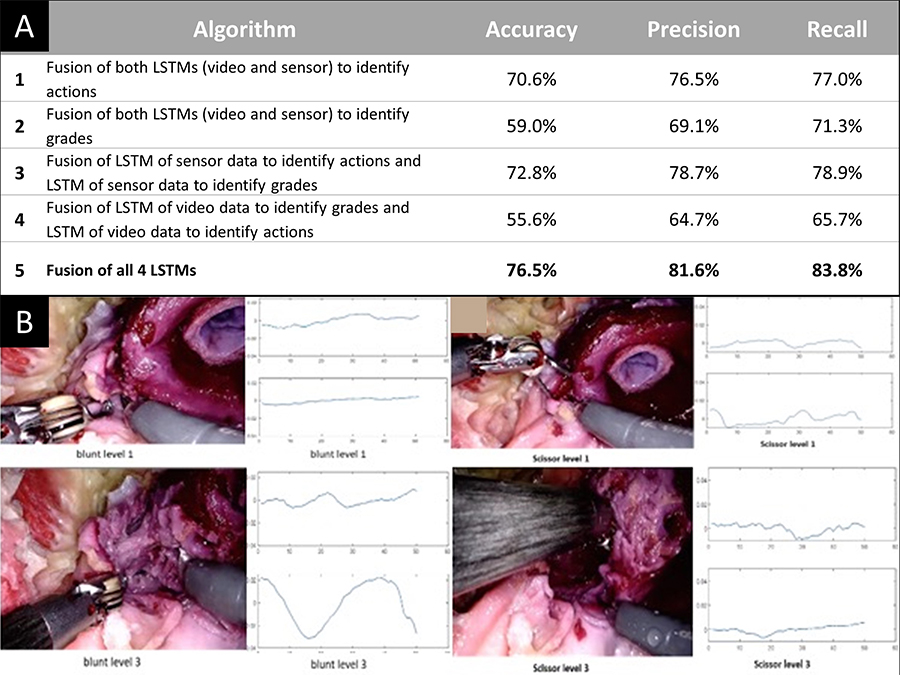

Methods: A total of 531 data points corresponding to instrument interactions with the NVB (scissor cut, sharp dissection, blunt dissection, and traction) were segmented from expert simulations, and synchronized endoscopic video and sensor readings were collected (Fig 1A). A severity grade, ranging from 1 (minimal) to 3 (severe injury), was assigned to each interaction.

Eighty percent of the segments were used to train four Long Short-Term Memory (LSTM) recurrent neural networks, one for each combination of modality (video or sensor) and task (identification or grading of actions). Each LSTM was analyzed individually before a Multilabel Multimodal Deep Learning (MMDL) technique was used to fuse the individual LSTMs into a single algorithm. The remaining 20% of the segments were used to evaluate the MMDL combinations.
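
As a rough illustration of the split and fusion described above, the following Python sketch divides 531 segment indices 80/20 and combines per-modality class probabilities by simple averaging. The abstract does not specify how the four LSTMs were fused or how the split was drawn, so the averaging rule, the random shuffle, and all names (`split_segments`, `fuse`, `predict`) are assumptions made for illustration only; the probability vectors stand in for LSTM outputs.

```python
import random

# Action classes and severity grades named in the Methods section.
ACTIONS = ["scissor cut", "sharp dissection", "blunt dissection", "traction"]
GRADES = [1, 2, 3]


def split_segments(n_segments, train_fraction=0.8, seed=0):
    """Shuffle segment indices and split them into train and test lists.

    Assumption: a random shuffle; the abstract does not say how the
    80% training subset was chosen.
    """
    indices = list(range(n_segments))
    random.Random(seed).shuffle(indices)
    n_train = int(n_segments * train_fraction)
    return indices[:n_train], indices[n_train:]


def fuse(prob_video, prob_sensor):
    """Hypothetical late fusion: average two class-probability vectors."""
    return [(v + s) / 2 for v, s in zip(prob_video, prob_sensor)]


def argmax(values):
    """Index of the largest value in a list."""
    return max(range(len(values)), key=values.__getitem__)


def predict(video_action, sensor_action, video_grade, sensor_grade):
    """Combine the four per-model outputs into one (action, grade) label pair."""
    action = ACTIONS[argmax(fuse(video_action, sensor_action))]
    grade = GRADES[argmax(fuse(video_grade, sensor_grade))]
    return action, grade


train_idx, test_idx = split_segments(531)

# Made-up probabilities standing in for the outputs of the four LSTMs.
example = predict(
    video_action=[0.1, 0.6, 0.2, 0.1],
    sensor_action=[0.2, 0.2, 0.5, 0.1],
    video_grade=[0.7, 0.2, 0.1],
    sensor_grade=[0.2, 0.5, 0.3],
)
```

With this split rule, 424 segments fall in the training set and 107 in the evaluation set. Other fusion schemes (for example, a learned layer over concatenated LSTM features) would fit the same description equally well.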

Results: Analysis of sensor or video data alone was limited in its ability to accurately identify surgical actions while also quantifying their respective forces. The MMDLs that combined both data sets into one analysis system were evaluated for accuracy, precision, and recall to quantify fully correct, incorrect, and partially correct answers (an action could be correctly identified while its severity grade was misassigned) (Fig 1B). Overall, the MMDL combination of all four LSTMs offered the best accuracy, precision, and recall (76.5%, 81.6%, and 83.8%, respectively) for determining each action and its severity grade.
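
To make the notion of fully, partially, and incorrectly labeled answers concrete, the sketch below scores multilabel predictions using example-based accuracy, precision, and recall. The abstract does not state which metric definitions were used, so these formulas (a common choice for multilabel problems) are an assumption, and the function names and toy labels are hypothetical.

```python
def categorize(true_labels, pred_labels):
    """Classify one prediction as fully correct, partially correct, or incorrect."""
    if pred_labels == true_labels:
        return "fully correct"
    if true_labels & pred_labels:
        return "partially correct"
    return "incorrect"


def example_based_metrics(y_true, y_pred):
    """Average per-sample accuracy, precision, and recall over label sets.

    accuracy  = |true & pred| / |true | pred|
    precision = |true & pred| / |pred|
    recall    = |true & pred| / |true|
    """
    n = len(y_true)
    acc = prec = rec = 0.0
    for true_labels, pred_labels in zip(y_true, y_pred):
        overlap = len(true_labels & pred_labels)
        union = len(true_labels | pred_labels)
        acc += overlap / union if union else 1.0
        prec += overlap / len(pred_labels) if pred_labels else 0.0
        rec += overlap / len(true_labels) if true_labels else 0.0
    return acc / n, prec / n, rec / n


# Toy data: each sample carries an action label and a severity-grade label.
y_true = [
    {"traction", "grade 2"},
    {"scissor cut", "grade 3"},
    {"blunt dissection", "grade 1"},
]
y_pred = [
    {"traction", "grade 2"},          # action and grade both right
    {"scissor cut", "grade 1"},       # right action, wrong grade
    {"sharp dissection", "grade 2"},  # both wrong
]

categories = [categorize(t, p) for t, p in zip(y_true, y_pred)]
accuracy, precision, recall = example_based_metrics(y_true, y_pred)
```

In this toy set the second sample counts as partially correct, which lowers accuracy more than precision or recall because the union of true and predicted labels grows while the overlap shrinks.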

Conclusions: MMDLs provide the basis for an automated and accurate method to identify and quantify instrument actions during RARP NVB dissection, which can be used to develop procedure-specific assessments and feedback for trainees (e.g., quantifying positive/negative action occurrences and/or significant forces on the NVB leading to neurapraxia). This approach can ultimately lead to independent training during realistic RARP simulations.

Source of Funding: None
