Back

Poster, Podium & Video Sessions

Moderated Poster

MP41: Surgical Technology & Simulation: Training & Skills Assessment

MP41-17: Using a multi-task convolutional neural network to predict surgeon skill in robot-assisted partial nephrectomy

Sunday, May 15, 2022

10:30 AM – 11:45 AM

Location: Room 228

Hal Kominsky*, Yihao Wang, Jessica Dai, Tara Morgan, Alaina Garbens, Jeffrey Gahan, Eric Larson, Dallas, TX

- HK

Hal D. Kominsky, MD

UT Southwestern Medical Center

Poster Presenter(s)

Introduction: Evaluating surgeon skill and proficiency during robotic assisted procedures is a challenging endeavor. Assessment tools must be efficient, reproducible, and scalable. Our group previously described a multi-task convolutional neural network (mtCNN) algorithm that could match human evaluations of performance of an in vitro urethrovesical anastomosis model with 86% accuracy. In this study, we develop and train a similar algorithm to predict surgeon performance of tumor resection and renorrhaphy during robot-assisted partial nephrectomy (RPN). We used GEARS (Global Evaluative Assessment of Robotic Skills), a validated metric, to evaluate performance.

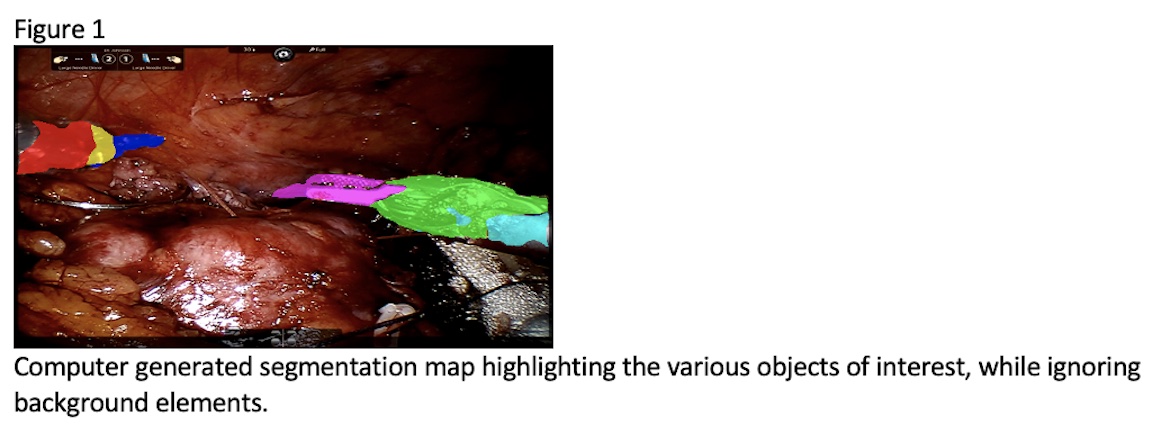

Methods: A total of 133 video clips of surgeons with varying robotic skill levels completing tumor excision and renorrhaphy were utilized. A custom convolutional network architecture was trained to segment surgical instrument types and movements in the procedure including arm flexion, abduction, grasping as well as needle tracking. The model was trained with 872 manually labeled segmentation images. From these segmentations, an array of time-based features were calculated from each segmented frame. These included position and area information, reducing each RPN procedure video to a time-series sequence of values. A composite GEARS score for each video was then calculated using a novel sequence analysis method. Success was defined when the model accurately predicted the domain scores within the range assigned by two human expert reviewers.

Results: Algorithm training for 32 videos (15-45 minute duration) was carried out using a high performance computing cluster with shared GPUs. The algorithm successfully matched two sets of human expert reviewers for each video across the GEARS domains with the following accuracy: Depth perception (69%), bimanual dexterity (65%), efficiency (43%), force sensitivity (84%), autonomy (47%), robotic control (47%).

Conclusions: We applied our previously described multi-task deep learning algorithm to robot-assisted partial nephrectomy videos that tracts instruments and evaluates proficiency. The model was successful at matching human generated scores but was less accurate than our previously described work on an in vitro task.

Source of Funding: None

Methods: A total of 133 video clips of surgeons with varying robotic skill levels completing tumor excision and renorrhaphy were utilized. A custom convolutional network architecture was trained to segment surgical instrument types and movements in the procedure including arm flexion, abduction, grasping as well as needle tracking. The model was trained with 872 manually labeled segmentation images. From these segmentations, an array of time-based features were calculated from each segmented frame. These included position and area information, reducing each RPN procedure video to a time-series sequence of values. A composite GEARS score for each video was then calculated using a novel sequence analysis method. Success was defined when the model accurately predicted the domain scores within the range assigned by two human expert reviewers.

Results: Algorithm training for 32 videos (15-45 minute duration) was carried out using a high performance computing cluster with shared GPUs. The algorithm successfully matched two sets of human expert reviewers for each video across the GEARS domains with the following accuracy: Depth perception (69%), bimanual dexterity (65%), efficiency (43%), force sensitivity (84%), autonomy (47%), robotic control (47%).

Conclusions: We applied our previously described multi-task deep learning algorithm to robot-assisted partial nephrectomy videos that tracts instruments and evaluates proficiency. The model was successful at matching human generated scores but was less accurate than our previously described work on an in vitro task.

Source of Funding: None

.jpg)

.jpg)