
Poster, Podium & Video Sessions

Podium

PD30: Education Research III

PD30-08: Urologic Trainee Surgical Assessment Tools: Seeking Standardization

Saturday, May 14, 2022

2:10 PM – 2:20 PM

Location: Room 243

Lauren Conroy*, Kyle Blum, Hannah Slovacek, Phillip Mann, Steven Canfield, Houston, TX

Podium Presenter(s): Lauren Conroy

Introduction: Assessing a urology trainee’s performance is critical to evaluating longitudinal progress toward surgical autonomy. At present, the surgical assessment tools used by training programs vary widely. We aim to critically analyze the tools available in urologic surgery, assess their validity, reliability, and feasibility, and identify features that may support a more standardized assessment pathway.

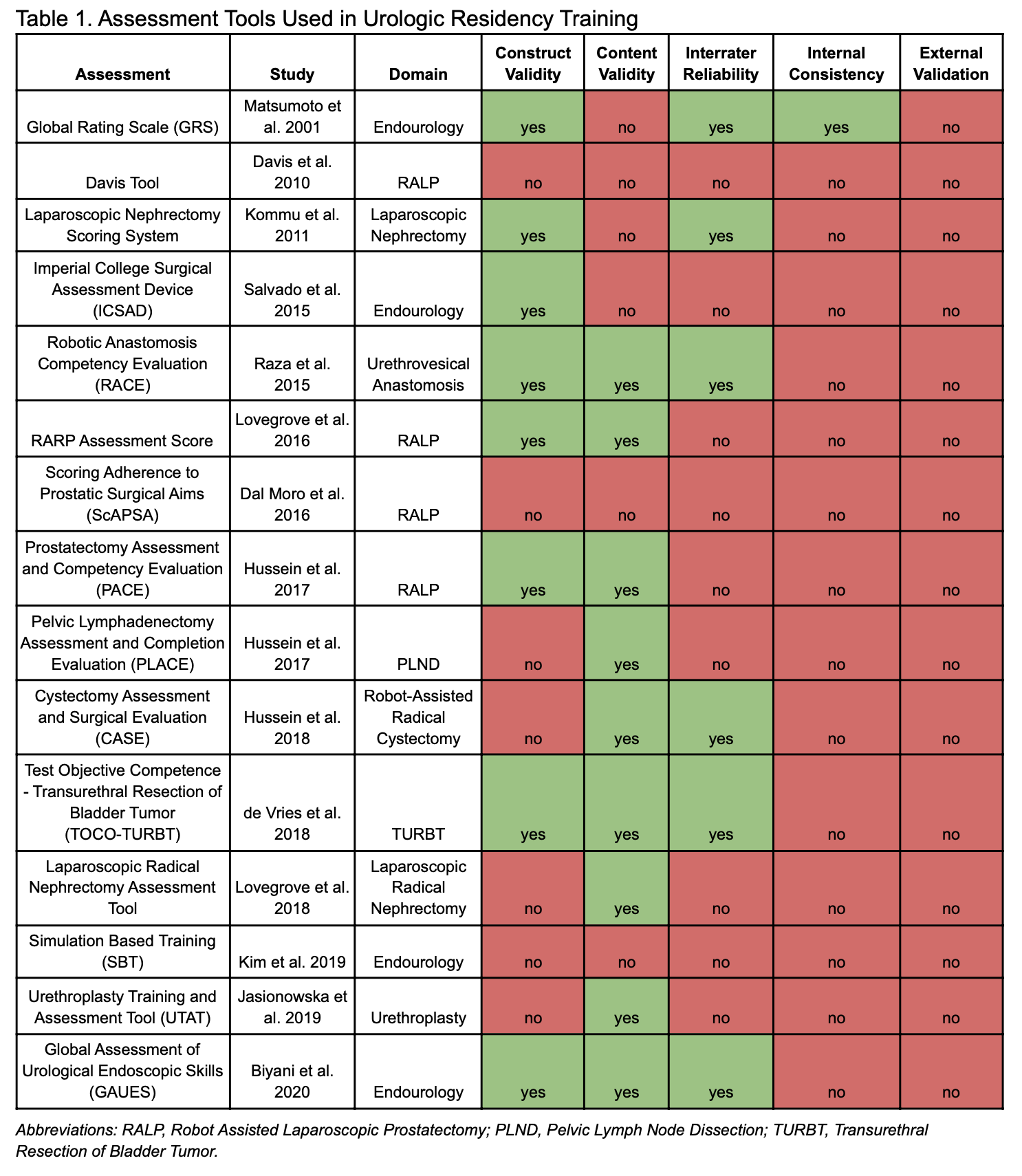

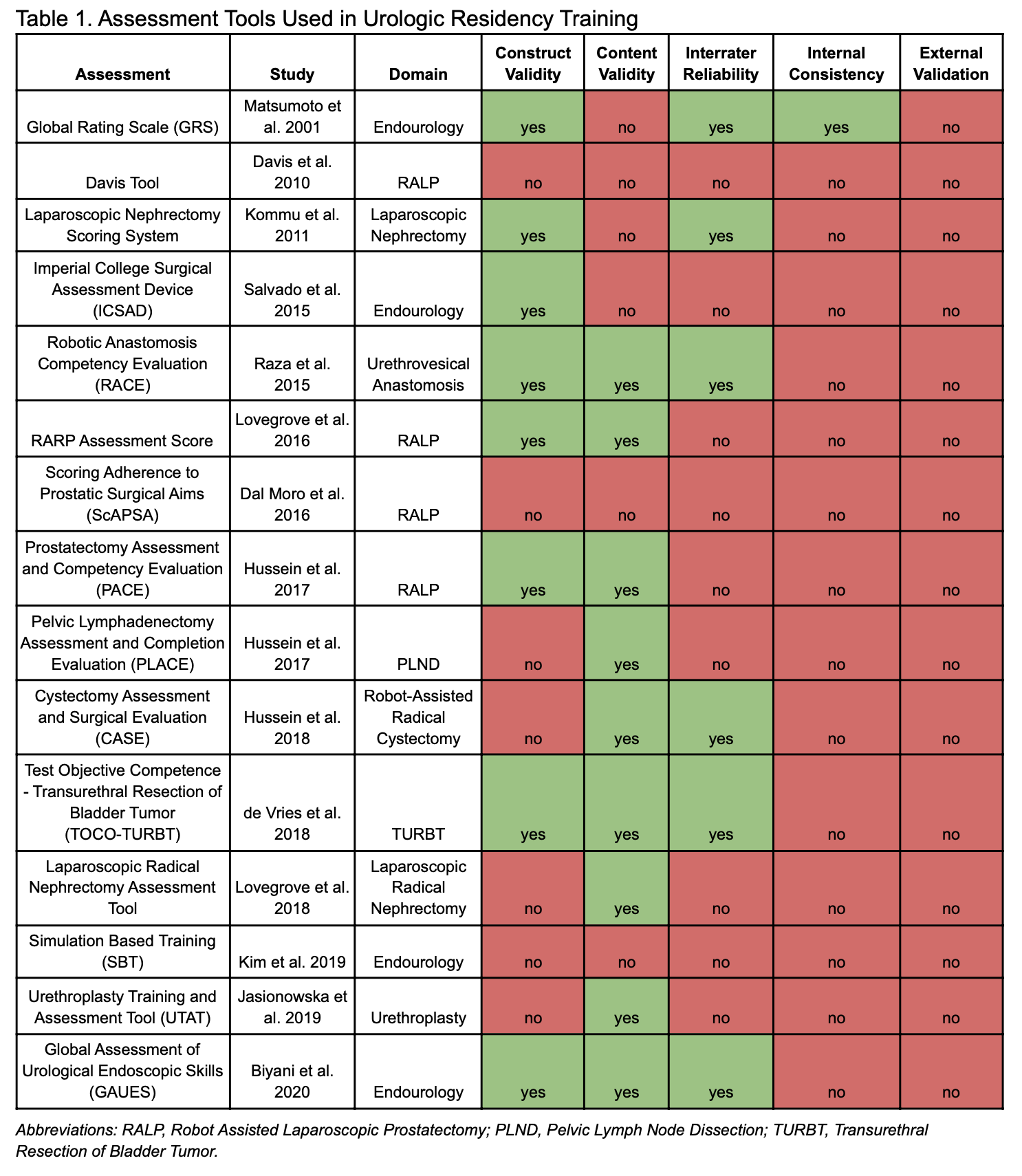

Methods: We reviewed the primary literature to identify surgical assessment tools published within the past 20 years, and included assessments specific to urologic training for final review. Each tool was evaluated for construct validity (its ability to distinguish performance between participants with differing levels of experience), content validity (its ability to measure the behavior it was intended to measure), interrater reliability (agreement among raters’ scores), internal consistency (correlation between individual raters’ scores and overall scores), and external validation.

Results: Thirty surgical assessment tools were identified, of which 15 were specific to urology (Table 1). Of these 15, six (40.0%) assessed surgical simulations (e.g., a robotic trainer) and nine (60.0%) were designed to provide real-time feedback in the operating room. Twelve (80.0%) had some form of validity: eight (53.3%) had construct validity, nine (60.0%) had content validity, and five (33.3%) had both. Six (40.0%) of the tools demonstrated significant reliability: six (40.0%) showed at least moderate interrater reliability for all of the tool’s components, and one showed at least acceptable internal consistency. No tool had been externally validated.
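As an arithmetic check on the Results, the short sketch below recomputes each percentage against the 15 urology-specific tools and applies inclusion-exclusion to the validity counts (8 with construct validity + 9 with content validity − 5 with both = 12 with either). It assumes that construct and content validity are the only validity categories counted toward the "some form of validity" total; the names `counts`, `pct`, and `either` are hypothetical and used only for illustration.

```python
# Counts taken from the Results; all percentages use the 15 urology-specific tools.
TOTAL = 15
counts = {
    "simulation-based": 6,
    "intraoperative feedback": 9,
    "any validity": 12,
    "construct validity": 8,
    "content validity": 9,
    "construct and content validity": 5,
    "reliable": 6,
}


def pct(n: int, total: int = TOTAL) -> str:
    """Format n as a percentage of total, rounded to one decimal place."""
    return f"{100 * n / total:.1f}%"


# Inclusion-exclusion (assumption: only construct and content validity are counted):
# |construct OR content| = |construct| + |content| - |both|
either = (
    counts["construct validity"]
    + counts["content validity"]
    - counts["construct and content validity"]
)

for label, n in counts.items():
    print(f"{label}: {n} ({pct(n)})")
print(f"construct or content validity: {either} ({pct(either)})")
```

The inclusion-exclusion total matches the reported twelve tools (80.0%), which is consistent with the validity breakdown given in the Results.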

Conclusions: Available urologic trainee assessment tools vary widely. The 15 urology-specific tools identified showed varying degrees of internal validity, and none had been externally validated. This lack of external validation raises the possibility of large variability in assessments between tools and risks discordant evaluations across training programs. There is an unmet need for a standardized surgical assessment tool, incorporated into AUA training programs, that is internally consistent, reliable, and externally valid.

Source of Funding: None.