Ignite Talk

Session: Ignite Session 1B

1291: Development and Pilot Implementation of a Predictive Model to Identify Visits Appropriate for Telehealth

Saturday, November 12, 2022

1:25 PM – 1:30 PM Eastern Time

Location: Center City Stage

David Leverenz, MD

Duke University

Durham, NC, United States

Disclosure: Disclosure information not submitted.

Ignite Speaker(s)

David Leverenz1, Mary Solomon1, Nicoleta Economau-Zavlanos1, Bhargav Srinivas Adagarla2, Theresa Coles1, Isaac Smith3, Robert Overton1, Catherine Howe3, Jayanth Doss4, Ricardo Henao1 and Megan Clowse4, 1Duke University School of Medicine, Durham, NC, 2Duke Clinical Research Institute, Durham, NC, 3Duke University Hospital, Durham, NC, 4Duke University, Durham, NC

Background/Purpose: Since January 2021, providers in our practice have used the Encounter Appropriateness Score for You (EASY) to document their perception of the appropriateness of telehealth or in-person care after every outpatient rheumatology encounter. In this project, we report the development of a predictive model that uses EASY scores and other variables to identify future encounters that could be done by telehealth. We then demonstrate the utility of this model through a pilot implementation.

Methods: The EASY score asks providers to rate each in-person and telehealth encounter as follows: "Which of the following encounter types would have been the most appropriate for today's visit? (irrespective of the pandemic)." 1 = Either in-person or telehealth acceptable, 2 = in-person preferred, 3 = telehealth preferred. The predictive model was developed using a 70/30 partition of training/test sets of outpatient rheumatology encounters in our practice with EASY scores from 1/1/21 – 12/31/21. The model used logistic regression to predict the outcome of a future encounter's EASY score, with "in-person preferred" as the control class (0) and "either in-person or telehealth acceptable" and "telehealth preferred" as the class of interest (1). A validation set of encounters from January 2022 assessed model performance in comparison to the test set. To pilot the model, we presented 3 providers with future visits from 3/1/22 – 5/31/22 that the model identified as candidates for a switch in encounter type, along with an equal number of randomly selected fake encounter switch predictions. The providers could accept or reject the proposed change in encounter type, and they were blinded as to whether the proposed changes were true or fake. Cohen's kappa was used to measure concordance between the true versus fake predictions and providers' acceptance of those predictions.
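The outcome coding described in the Methods can be illustrated with a minimal sketch. The function name `easy_to_binary` is hypothetical and is not taken from the authors' code; only the mapping itself (EASY 2 to class 0, EASY 1 or 3 to class 1) comes from the abstract.

```python
def easy_to_binary(easy_score: int) -> int:
    """Collapse a three-level EASY score into the binary model outcome.

    Mapping as stated in the abstract:
      2 (in-person preferred)                     -> 0 (control class)
      1 (either acceptable), 3 (telehealth pref.) -> 1 (class of interest)
    The function name is an illustrative assumption.
    """
    if easy_score == 2:
        return 0
    if easy_score in (1, 3):
        return 1
    raise ValueError(f"EASY score must be 1, 2, or 3; got {easy_score!r}")


# Example: a small batch of hypothetical EASY scores
labels = [easy_to_binary(s) for s in (1, 2, 3, 2)]
print(labels)
```

In this coding, class 1 means "telehealth would have been at least acceptable," which is the sense in which the model flags future visits as candidates for telehealth.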

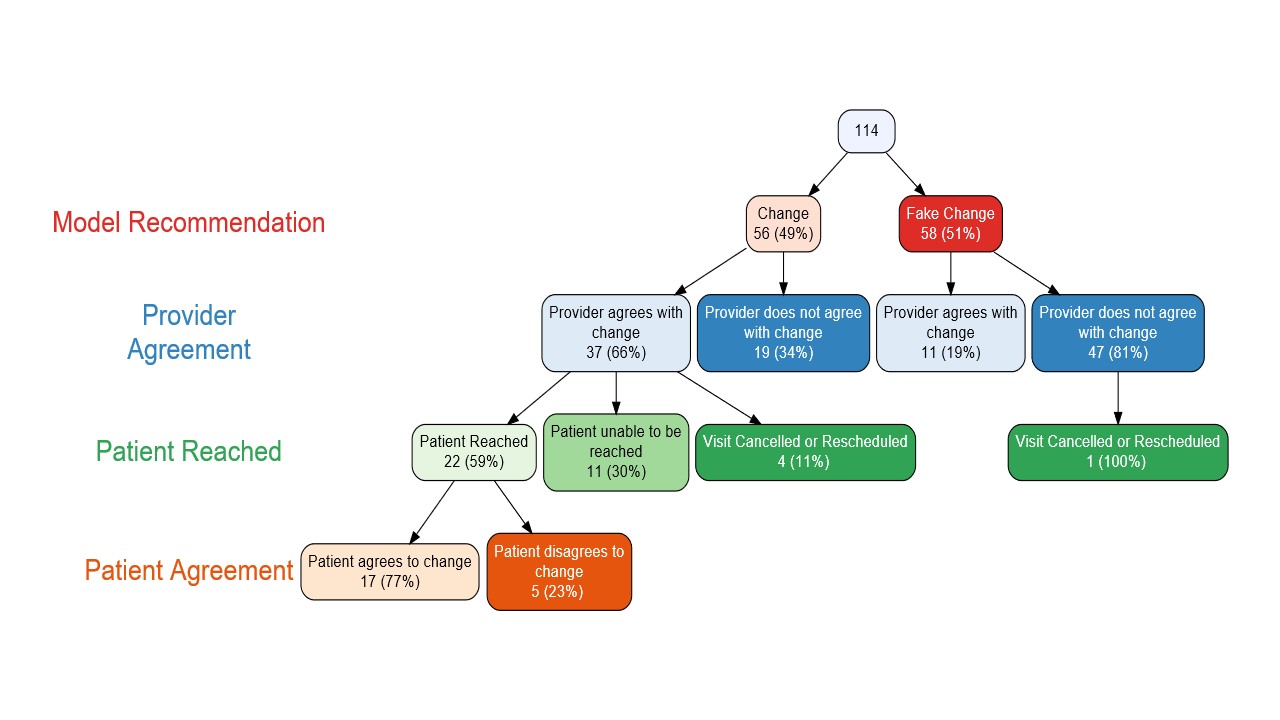

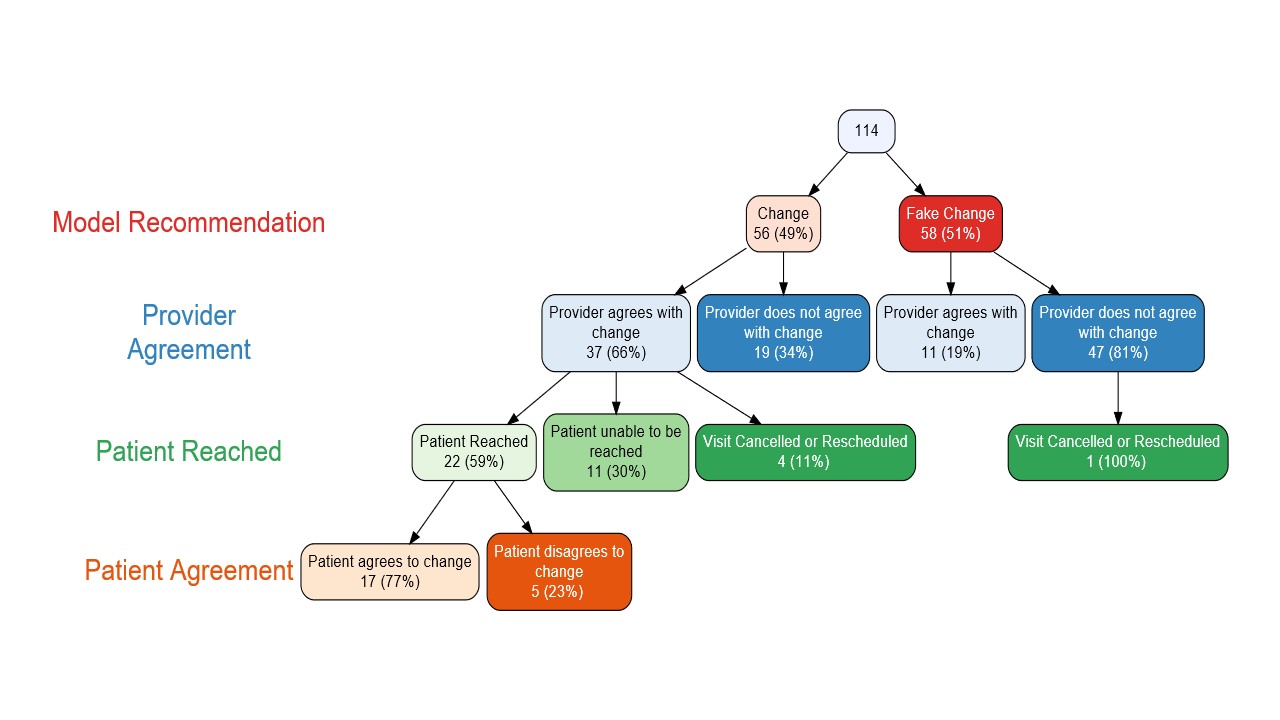

Results: During model development, the training and test sets included 10,551 and 4,541 encounters, respectively. The model AUC was 0.831. We set the model's operational point at a positive predictive value (PPV) of 0.80 for correctly identifying visits appropriate for telehealth with a sensitivity of 0.384; this was better than existing practice, which had a PPV of 0.766 at the same sensitivity (Figure 1). The validation set of 1,244 encounters showed similar performance. Significant contributors to the model included patient age, diagnoses, medications, RAPID3 scores, and provider preference for telehealth (Table 1). The pilot implementation included 114 encounters. Providers accepted 37/56 (66%) of the true predicted changes compared to 11/58 (19%) of the fake predictions; standard Cohen's kappa = 0.4722, p < 0.001 (Figure 2). Of the patients who were offered a change, 17/22 (77%) agreed.
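The reported kappa can be reproduced from the pilot counts alone. The sketch below assumes a 2x2 table of prediction type (true or fake) against provider decision (accepted or rejected), built from the 37/56 and 11/58 figures above; the function name `cohen_kappa_2x2` is illustrative and not from the authors' analysis code.

```python
def cohen_kappa_2x2(a: int, b: int, c: int, d: int) -> float:
    """Cohen's kappa for a 2x2 table.

    a = true prediction, accepted    b = true prediction, rejected
    c = fake prediction, accepted    d = fake prediction, rejected
    "Agreement" means accepting a true prediction or rejecting a fake one.
    """
    n = a + b + c + d
    observed = (a + d) / n
    # Chance agreement from the row and column marginals
    expected = ((a + b) * (a + c) + (c + d) * (b + d)) / n**2
    return (observed - expected) / (1 - expected)


# Counts from the Results: 37 of 56 true predictions accepted,
# 11 of 58 fake predictions accepted.
kappa = cohen_kappa_2x2(a=37, b=56 - 37, c=11, d=58 - 11)
print(round(kappa, 4))  # 0.4722
```

Observed agreement is 84/114 and chance agreement is 6516/12996, which gives kappa = 3060/6480, or about 0.4722, matching the value in the abstract.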

Conclusion: By measuring provider perceptions of telehealth appropriateness in every visit, we are able to predict ahead of time visits that could be done by telehealth. When providers and patients were offered these changes, the majority agreed. Thus, this predictive model has the potential to expand access to telehealth care to patients for whom it is appropriate.

Figure 1: Predictive model Receiver Operating Characteristic (ROC), showing model Area Under the Curve (AUC) and the sensitivity / specificity at the operational point, as compared to current practice without the model.

Table 1: Clinical and visit-related variables contributing to predictive model performance. Positive coefficients indicate higher log odds of a visit being appropriate for telehealth, and negative coefficients indicate a lower log odds. (*) indicates significant contributors at p < 0.05. DMARD = disease modifying anti-rheumatic drug, cs = conventional synthetic, b = biologic, ts = targeted synthetic.

Figure 2: Flowchart of pilot implementation among 3 providers, showing provider agreement with true versus fake model recommendations, as well as patient acceptance of those changes. Patients were not offered a change if the original prediction was a fake prediction.

Disclosures: D. Leverenz, Pfizer, Rheumatology Research Fund; M. Solomon, None; N. Economau-Zavlanos, Pfizer, National Institute of Neurologic Disorders and Stroke, BEIGENE; B. Srinivas Adagarla, None; T. Coles, Pfizer, Regenxbio, Merck/MSD; I. Smith, None; R. Overton, None; C. Howe, None; J. Doss, Pfizer; R. Henao, None; M. Clowse, Exagen.
